This story was originally published by Capital B, a nonprofit newsroom that centers Black voices and experiences. To read more of Adam Mahoney’s work, visit Capital B.

Ninety years ago, President Franklin D. Roosevelt and South Carolina Gov. Ibra Blackwood worked together to bring electricity to rural South Carolina. But to build the power plant that would make it happen, they destroyed the homes of 900 Black sharecropping families. With them, 6,000 graves — including those of formerly enslaved people — were removed or desecrated.

Today, as South Carolina races to power its digital future, history seems to be repeating itself, with Black communities once again paying the price for progress.

Last year, the parent companies of Facebook and Google pledged more than $4 billion for new data centers in South Carolina. Every email you send, question you ask ChatGPT, or Instagram post you share relies on these centers. However, on this new digital frontier, the health and safety of Black communities are at risk.

While state officials work to craft legislation to attract these new projects, residents and community advocates say this will ramp up environmental hazards, increase utility bills, and exacerbate health disparities. Meanwhile, experts say, the economic promise of AI remains a mirage for Black communities, widening wealth gaps and displacing workers.

“Most Black households, especially rural ones in the South, are not using AI or as much computing power, but they are having to pay for that demand in both money and dirty air,” said Shelby Green, a researcher at the Energy and Policy Institute.

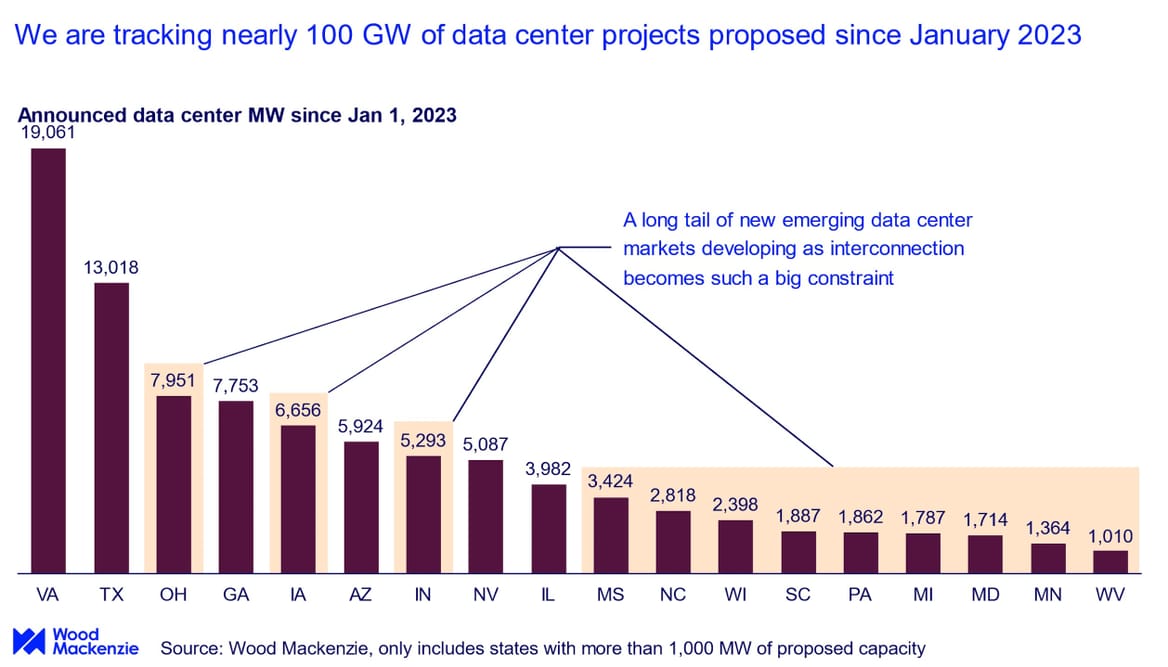

South Carolina is joining other states, like Texas and Illinois, with proposals to reopen at least two power plants in rural Black communities to run these new projects. Rural communities have begun to attract tech companies for data centers due to their low population densities, ample open space, and relatively lower energy and land costs.

Energy experts argue that the growing electricity demands from data centers are prolonging America’s dependence on dirty energy sources. Nationwide, at least 17 fossil fuel generators scheduled for closure are now delayed or at risk of delay, and about 20 new fossil fuel projects are being planned to meet data centers’ soaring energy demands. By 2040, South Carolina projects the need for four new fossil fuel power plants.

At a protest last year, Audrey Henderson, a resident of one of the towns facing the prospect of a polluting power plant, said she fears the impacts on her and her neighbors’ properties.

“My forefathers worked hard to get that property; that we have land. I have children in New York to get land when I pass away. Grandchildren and so forth and so on,” she said. The fact “they could just come in here, give us a couple of dollars, and take our land and put pipelines into it. Then we also have well water, just stuff going into the wells is very disheartening, and I’m really concerned.”

Across the country, low-income Black communities face the harshest pollution exposure from these plants, while Black workers are disproportionately in roles most vulnerable to AI and automation. A McKinsey & Co. analysis warns that if AI growth continues at its current pace, the wealth gap between Black and white households could widen by $43 billion annually within the next two decades because of disparities in who it serves.

Compounding these issues, data centers are expected to use 12% of the nation’s energy by 2028, a 550% increase from last year. An artificial intelligence search using ChatGPT, for example, uses anywhere from 10 to 30 times more energy than a regular internet search.

“The energy demand, data centers, and where the energy sector is going should not come at the expense of low-income and Black communities,” said Xavier Boatwright, an activist who has worked on environmental issues in rural South Carolina for years.

In South Carolina, officials predict data centers will drive 70% of the state’s increased energy use, with subsidies already raising utility bills for consumers. Through his canvassing across the state, Boatwright said he now regularly sees rural mobile home communities where people are paying more for their utility bills than mortgages because of this increase.

“It’s kind of like if you go out and your employer is paying for your dinner, and you order the fanciest stuff on the menu,” explained Green, who researches how rising utility bills are pushing Southern Black communities into poverty. “You don’t really have to worry about how expensive it is because it’s not coming out of your pocket. That’s how these companies are operating; they’re not holding the risk associated with increasing electricity costs and these new power plants — you are.”

In majority-Black, poor rural Fairfield County, the state is proposing to reopen a stalled nuclear plant that has long been a symbol of broken promises and financial strain for residents. Advocates warn that restarting this decades-long gamble could further burden a population already facing systemic neglect. Billions of taxpayer dollars and rising energy costs are at stake, yet the benefits of the project seem unlikely to reach those facing the worst consequences.

In South Carolina, and across the country, statistically, Black people use the least amount of electricity, yet experience the highest energy burden — meaning a larger share of their income goes toward energy bills.

In the other case, a Black stronghold in Colleton County celebrated the monumental victory of closing a coal-fired power plant in their neighborhood, which was connected to poor health outcomes for residents. Now, the state proposes to convert that very site into a gas-fired power plant to meet the energy demands of data centers. Every year, the pollution from natural gas plants is responsible for approximately 4,500-12,000 early deaths in the U.S., studies show.

“If you mapped all of the existing power plants in South Carolina, they’d follow the old path of one of the foundational pillars of the American economy through South Carolina: plantations and enslaved labor,” Boatwright said. “We’ve seen the repeated pattern of these threats in our community.”

With this boom, tech companies like Google are making huge profits by securing special deals with utility companies.

Google’s head of data center energy, Amanda Peterson Corio, said Google’s energy supply contracts undergo “rigorous review” by utility regulators and are crafted “to ensure that Google covers the utility’s cost to serve us.”

Yet, last year, the company inked a deal in South Carolina to pay less than half the rate that households pay for electricity.

These low rates, combined with tax breaks and state-approved subsidies, are used to lure big tech companies. However, these deals force local families and households to cover the cost of building extra power plants, meaning everyday customers end up footing the bill.

A data center is a combination of massive warehouses packed with rows of servers and high-tech gear that stretch longer than football fields, all humming away to store and manage the digital data we use every day. They’re so massive, for example, that Google’s first South Carolina data center, which opened in Berkeley County in 2009, uses the equivalent electricity of roughly 300,000 homes and the amount of water of at least 9,000 homes. As of 2021, it was also powered by more fossil-fuel-based energy sources than any of Google’s two dozen other data centers nationwide.

This uneven situation leads to a growing gap between corporate savings and community expenses, with everyday people shouldering the extra burden. Black communities, in particular, tend to face higher utility costs and, as a result, are more likely to have their power shut off for missed payments. Along the East Coast, monthly utility bills are expected to increase as much as $40 to $50, mainly due to data centers.

South Carolina legislators — Democrats and Republicans — have implored the state’s regulators to rethink discounts and other subsidies, but the push has not made waves so far.

“Current residential ratepayers are going to pay a lot, lot more because of data centers that bring almost no employees,” Chip Campsen, a Republican South Carolina state senator, said at a legislative hearing last September. Tech companies must “participate in paying the capital costs for building the generating capacity for these massive users of energy.”

This issue ties into broader government policies aimed at boosting American technological growth and making the United States a leader in artificial intelligence. The Trump administration, for example, has signaled that it might bypass some environmental regulations to speed up projects like data centers and power plants. The new leader of the Environmental Protection Agency, Lee Zeldin, has said making “the United States the Artificial Intelligence capital of the world” will be one of his five guiding pillars, along with making it easier for tech and manufacturing companies to invest in the American economy.

Near Jenkinsville, South Carolina, half-built nuclear reactors — remnants of the long-stalled V.C. Summer project — tower over a Black community where three out of four live in poverty. They stand as a stark reminder of a $9 billion investment that never produced power. Despite customers still footing the bill for this abandoned venture to the tune of multiple utility bill increases, the state-owned utility company Santee Cooper is now inviting proposals to complete one or both units. Supporters argue that reviving the project would add 2,200 megawatts to the grid — enough to power hundreds of thousands of homes — and help meet surging energy demands driven by tech giants. Recent inspections by the state’s Nuclear Advisory Council have deemed the site in excellent condition, bolstering growing legislative support.

Researchers say it would be the first and only nuclear project to restart after being halfway built. Critics caution that history warns against overly ambitious nuclear bets that can lead to decades of delays, spiraling costs, and additional burdens on customers. They contend that immediate, incremental investments in solar power and battery storage could offer a safer, more adaptable path forward rather than reinvesting in a risky long-term gamble. Currently, nuclear energy is about four times more expensive to produce than solar energy.

Last year, the state was granted over $130 million from the Biden administration for solar projects, but the funding is now in limbo under the Trump administration.

The Black-led South Carolina Energy Justice Coalition has spent years advocating for solar and wind energy in rural Black communities. “Every citizen is worthy of that type of energy by virtue of just being a human being,” Shayne Kinloch, the group’s director, said last year.

Along the banks of the Edisto River in the heart of South Carolina, a former coal-fired power plant — closed in 2013 — is poised for a dramatic transformation. Dominion Energy and Santee Cooper plan to convert the site into one of the nation’s largest gas-fired power stations, a move approved by the state’s Public Service Commission. Designed to produce up to four times the energy of the old plant, this project is a central part of efforts to retire remaining coal facilities by 2030 and transition toward a future of “reliable, affordable, and increasingly clean energy,” the companies said.

However, environmental advocates and legal experts warn that this expansion of gas infrastructure may echo a troubling legacy in which vulnerable communities bear the brunt of environmental and economic risks. Shifting from coal to gas plants is neither environmentally sound nor cost-effective, opponents say, because while gas produces slightly less pollution than coal, it still contributes significantly to greenhouse gas emissions. It also comes with high infrastructure and maintenance costs.

This month, the state’s House of Representatives approved legislative changes that would weaken oversight of gas power plants. Joining Republicans, South Carolina’s Legislative Black Caucus Chairwoman Annie McDaniel, a Democrat representing the county home to the nuclear plant, voiced support for the legislation.

McDaniel did not respond to Capital B’s request for comment.

The changes, if passed by the state’s Senate, would allow the groups to bypass rigorous environmental reviews when proposing projects. Supporters claim these measures are necessary to meet rising energy demands from tech-driven growth. Critics argue this could sideline investments in renewable alternatives like solar and battery storage and increase the risk of higher costs, delays, and potential noncompliance with federal pollution standards.

“Instead of investing in more risky energy generation and infrastructure, they should be investing in energy solutions like solar and storage,” Boatwright said, “but utilities are choosing the most expensive and environmentally risky.”

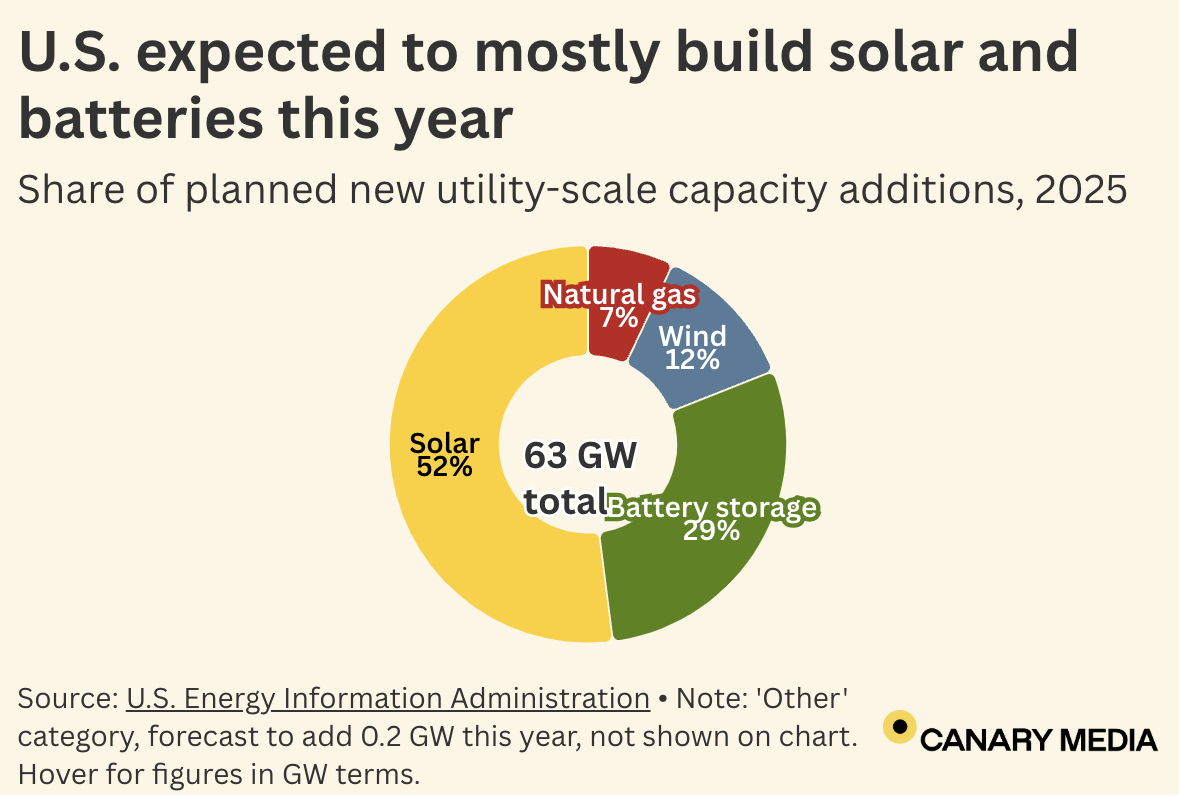

The numbers are in, and clean energy is set to sweep U.S. power plant construction yet again this year.

The U.S. is expected to build 63 gigawatts of power plant capacity this year, more than it has in decades, as new AI computing and domestic manufacturing projects cause a surge in energy demand. At this crucial juncture, plants that don’t burn fossil fuels are set to deliver 93% of all the new capacity joining the U.S. grid in 2025, per new estimates from the federal Energy Information Administration.

The new prediction is no fluke — carbon-free sources delivered nearly all the new capacity last year, too. And the trend was building for years before that.

This year, utility-scale solar is expected to continue its winning streak as the largest source of new electricity generation. More than half of new power plant capacity built this year will be solar, followed by batteries, with 29% of total capacity. That’s a step up for batteries from last year. Meanwhile, solar’s share is forecast to fall, but EIA expects more construction in absolute terms — 32.5 gigawatts compared to 30 last year.

Wind will add 12% of the new capacity, burnished by two major offshore wind projects the EIA still expects to come online despite political headwinds: Massachusetts’ 800-megawatt Vineyard Wind 1 and Rhode Island’s 715-megawatt Revolution Wind. The Trump administration unilaterally halted federal permitting for new offshore wind projects, but these are among the five that were already under construction, with necessary permits in hand.

This dominant showing from clean energy developers leaves natural gas with just 7% of new power capacity. That fossil fuel still leads in total U.S. electricity generation with about 42% of the mix but has entered a multi-year slump in terms of new construction.
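The shares quoted above can be cross-checked with a little arithmetic. The following is a minimal sketch that uses only the figures cited in this article (63 gigawatts in total, 32.5 gigawatts of solar, and the 29%, 12%, and 7% shares). The variable and function names are illustrative assumptions, and the gigawatt values derived from percentages are approximations, not EIA figures.

```python
# Figures quoted in the article. Names are illustrative, not EIA identifiers.
TOTAL_NEW_CAPACITY_GW = 63.0
SOLAR_GW = 32.5
QUOTED_SHARES = {"batteries": 0.29, "wind": 0.12, "natural gas": 0.07}


def capacity_breakdown(total_gw=TOTAL_NEW_CAPACITY_GW, solar_gw=SOLAR_GW,
                       quoted_shares=QUOTED_SHARES):
    """Return {source: (gigawatts, share_of_total)}.

    Solar's share is derived from its gigawatt figure; the other sources'
    gigawatt values are derived from their quoted shares, so they are
    approximations.
    """
    breakdown = {"solar": (solar_gw, solar_gw / total_gw)}
    for source, share in quoted_shares.items():
        breakdown[source] = (share * total_gw, share)
    return breakdown


def non_fossil_share(breakdown):
    """Sum the shares of every source except natural gas."""
    return sum(share for source, (_, share) in breakdown.items()
               if source != "natural gas")


breakdown = capacity_breakdown()
for source, (gw, share) in breakdown.items():
    print(f"{source:12s} {gw:5.1f} GW  {share:6.1%}")
print(f"non-fossil share: {non_fossil_share(breakdown):.0%}")
```

Under these inputs, solar works out to a little under 52% of the total, consistent with "more than half," and the three non-fossil sources together round to the 93% figure cited earlier.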

The EIA predicts total gas-fired generation — the actual electricity produced — will fall 3% this year while solar generation rises by more than one-third.

This dataset offers a snapshot of where the U.S. power industry is heading — and the direction is toward cleaner, cheaper energy that mainly comes from solar and batteries.

But beyond the climate metrics, these clean power plants are proving vital in meeting the needs of an increasingly power-hungry economy. Data centers, AI hubs, and the domestic manufacturing that grew during the Biden administration all need more electricity. Renewables and batteries are the source of energy that can meet this demand most quickly and cost-effectively, though they still need to work alongside other resources to ensure 24/7 service.

A fast-growing startup is giving Texas homeowners cheap access to unusually large batteries for backup power — and paying for it by maneuvering those same batteries in the state’s ERCOT energy markets.

Base Power launched last May and already has installed more than 1,000 home batteries, around 30 megawatt-hours, in North Austin and the Fort Worth area, CEO Zach Dell told Canary Media recently. The company plans to expand that footprint to 250 megawatt-hours this year, he added.

To make good on that promise, the 80-person startup rolled out service to the Houston area last week. That move had been planned for this summer, but customers in the storm-prone metropolis were calling and emailing to sign up, and a cold snap was bearing down on Texas, testing the grid’s ability to keep pace with winter energy needs.

“We saw what happened in Winter Storm Uri, four years ago, and we want to put a solution in the hands of Texans for situations like that,” Dell said, referring to the widespread grid outages that contributed to hundreds of deaths. “As another cold front sweeps through Texas this week, we felt like pulling up the Houston launch as quickly as possible was the right thing to do.”

If a homeowner in Texas wants backup power, they could buy solar and a battery. But most battery products aren’t large enough to meet the needs of the typical American home — that’s why you see three Tesla Powerwalls lined up in some garages. At that point, the out-of-pocket cost reaches tens of thousands of dollars, unless the buyer grapples with the current state of interest rates and takes out a loan.

Base Power pitches the benefits of whole-home backup power without the massive up-front expenditure. The company designed its own battery for the express purpose of backup power, so each unit packs 25 kilowatt-hours of storage instead of the usual 10 or 15. It can instantly discharge 11.4 kilowatts of power. Some people get two of these side by side for a truly hefty home energy arsenal.

But the customers don’t buy this product: They pay a $495 installation fee and an ongoing monthly fee of $16. They also choose Base Power as their electricity retailer — Texas allows customers to pick who they buy from — and pay 8.5 cents per kilowatt-hour for their general household consumption.
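To make those numbers concrete, here is a rough sketch of the arithmetic behind the offer. The battery size, discharge limit, fees, and rate come from this article, and the 36-month term matches the three-year contract described later in the piece. The monthly household usage of 1,000 kilowatt-hours is a hypothetical value chosen only for illustration, and the function names are assumptions, not anything Base Power publishes.

```python
# Figures quoted in the article.
BATTERY_KWH = 25.0                 # storage per unit
MAX_DISCHARGE_KW = 11.4            # instantaneous discharge limit
INSTALL_FEE_USD = 495.0
MONTHLY_FEE_USD = 16.0
ENERGY_RATE_USD_PER_KWH = 0.085    # 8.5 cents per kilowatt-hour


def backup_hours(load_kw, capacity_kwh=BATTERY_KWH, max_kw=MAX_DISCHARGE_KW):
    """Hours a full battery can carry a constant load, ignoring losses."""
    if load_kw <= 0:
        raise ValueError("load must be positive")
    if load_kw > max_kw:
        raise ValueError("load exceeds the battery's discharge limit")
    return capacity_kwh / load_kw


def contract_cost(months, monthly_usage_kwh):
    """Return (battery fees, energy charges) in dollars over the term."""
    fees = INSTALL_FEE_USD + MONTHLY_FEE_USD * months
    energy = ENERGY_RATE_USD_PER_KWH * monthly_usage_kwh * months
    return fees, energy


# Hypothetical household: 1,000 kWh per month over a 36-month contract.
fees, energy = contract_cost(months=36, monthly_usage_kwh=1000)
print(f"battery fees over 36 months: ${fees:,.2f}")
print(f"energy charges over 36 months: ${energy:,.2f}")
print(f"hours at full discharge: {backup_hours(MAX_DISCHARGE_KW):.1f}")
```

At the maximum discharge rate, a single unit would empty in a bit more than two hours; a household drawing less power would last proportionally longer. The sketch ignores conversion losses and any reserve the battery might hold back.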

Just as there’s no such thing as a free lunch, there’s no such thing as nearly free backup power. Base Power (and, by extension, its venture backers) fronts this rather hefty bill, on the premise that it can make money not just from customer subscriptions but by bidding the decentralized battery fleet into the ERCOT energy markets. That also lets Base Power charge a lower rate for household electricity than it otherwise would need to.

“We’re a battery developer; we’re an asset owner,” Dell explained. “For us and for the customer, a bigger battery is better.”

In that sense, this company is the newest in a lineage of startups seeking to unlock the multi-layered benefits of distributed energy devices, which both help a local customer and, when harnessed with effective software and amenable market rules, make the overall grid more clean and efficient.

Many startups have gone bankrupt chasing this rosy vision. Base Power aims to avoid their fate by adopting a very old technique in the utility sector: vertical integration.

To turn their customer-friendly product into a viable business, Dell and company have taken control of every step of their value chain, rather than outsourcing or partnering.

The company designed its own battery hardware, giving it far more capacity than the market-leading home battery systems. Base Power wrote its own software to govern the batteries and operate them as a decentralized fleet bidding into the wholesale markets. And the startup does its own sales, installations, and long-term maintenance.

The corporate strategy, Dell explained, is to create “compounding cost advantage through vertical integration.” If Base Power bought, say, Tesla Powerwalls and resold them to customers, it would have to give Tesla a margin. If it paid outside firms to knock on doors and pitch batteries, those commercial evangelists would also take their cut. Contracting out for installation further dilutes the profits, and so on.

“Because we do all these things, we can take cost out of every part of the system and then pass those savings down to the customer in the form of low prices,” Dell said. “As our returns go up and our cost of capital goes down, our intention is to build the largest and most capital-efficient portfolio of batteries in the country.”

Minimizing cost and reliance on outside parties makes fundamental sense, and yet fledgling startups typically shy away from taking on so much for fear of biting off more than they can chew.

“It’s really hard,” Dell admitted. However, “because it’s so hard, there’s not a lot of people who can do it.”

It’s a business strategy that calls to mind the ancient bristlecone pines that occupy a remote, arid mountaintop between the eastern Sierras and California’s border with Nevada. The trees suffer extremes of heat and cold and thirst and wind, but when they persist, they carve out a niche where few competitors can survive. The oldest bristlecones predate the pyramids of Giza.

The do-it-all approach also distinguishes Base Power from others that are similarly trying to get more batteries into people’s homes.

German company sonnen has been working on this challenge for over a decade and operates a vast network of home batteries in Germany that make money in power markets there. In the U.S., the company partners with solar companies and sometimes with real estate developers to sell its batteries. Sonnen launched a no-money-down battery offering in Texas with a company called Solrite, which had put equipment in more than 1,000 homes as of January, a similar volume to what Base Power has installed.

Neither sonnen nor Solrite is a retail electricity provider in Texas, though, so they need to pair up with companies that buy and sell power and can monetize the batteries’ ability to arbitrage. The Solrite deal requires people to sign up for 25 years and buy out any remaining value if they want to quit before the quarter-century mark. Base Power, in contrast, asks customers to commit to a three-year retail contract, and the cancellation fee is $500, to cover removing the battery system.

Other climatetech-savvy retailers offer special deals for people who buy their own batteries. Great Britain’s Octopus Energy has entered the ERCOT market and offers modest monthly credits per kilowatt-hour of storage capacity if residents let the company manage their home batteries. Octopus uses its software to shift consumption to times with abundant renewable generation, thereby lowering the cost of serving those households.

Startup David Energy offers retail plans in which the company optimizes customers’ battery usage to minimize their overall electricity bill. Tesla itself opened a Texas electricity retailer subsidiary that pays customers a fixed credit of $400 per year for each Powerwall pack that they allow to discharge to the grid, up to three Powerwalls.

Those providers still need customers to front the money or take out a loan to install their own batteries, which constrains how quickly battery adoption can grow. On the other hand, that model means those companies can focus on honing their energy software and trading strategy and don’t have to spend millions of dollars to install and own batteries that might one day pay for themselves.

Base Power pays for its buildout with a mix of equity, debt, and tax credits, Dell noted. Investors funded an $8 million seed raise led by Thrive Capital and a $60 million Series A led by Valor Equity Partners. As for making money, Base Power uses the batteries to arbitrage energy in the ERCOT market from the renewables-filled times of plenty to the valuable hours of scarce supply. The company is currently undergoing qualification to bid ancillary services, a more complex suite of market offerings that maintain the quality and reliability of the grid.
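Energy arbitrage of the kind described here boils down to charging when power is cheap and discharging when it is expensive. The sketch below is a deliberately simplified illustration, not Base Power's trading strategy: it finds the single most profitable charge hour followed by a later discharge hour in a list of hourly prices. The prices, the 90% round-trip efficiency, and the function name are all hypothetical assumptions.

```python
def best_single_cycle(prices_usd_per_mwh, energy_kwh, round_trip_efficiency=0.9):
    """Return (profit_usd, charge_hour, discharge_hour) for one cycle.

    Buys energy_kwh in the cheapest hour seen so far and sells what is
    left after losses in a later hour. Returns (0.0, None, None) when no
    profitable pair exists.
    """
    best = (0.0, None, None)
    cheapest_hour = 0
    for hour in range(1, len(prices_usd_per_mwh)):
        # Track the cheapest hour strictly before the candidate sell hour.
        if prices_usd_per_mwh[hour - 1] < prices_usd_per_mwh[cheapest_hour]:
            cheapest_hour = hour - 1
        buy = prices_usd_per_mwh[cheapest_hour]
        sell = prices_usd_per_mwh[hour]
        # Prices are per megawatt-hour, so divide kilowatt-hours by 1,000.
        profit = (sell * round_trip_efficiency - buy) * energy_kwh / 1000.0
        if profit > best[0]:
            best = (profit, cheapest_hour, hour)
    return best


# Hypothetical day of hourly prices with a midday dip and an evening spike.
prices = [30, 25, 20, 22, 40, 60, 45, 35, 30, 28, 26, 24,
          22, 25, 35, 60, 120, 200, 150, 80, 50, 40, 35, 30]
profit, charge_hour, discharge_hour = best_single_cycle(prices, energy_kwh=11.4)
print(f"charge at hour {charge_hour}, discharge at hour {discharge_hour}, "
      f"profit ${profit:.2f}")
```

A real fleet operator would also have to account for multiple cycles per day, price forecasting error, battery wear, and the energy that must stay in reserve for customers' backup needs, none of which this sketch models.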

This is the fourth and final article in our series “Boon or bane: What will data centers do to the grid?”

Tom Wilson is aware that the explosive growth of data centers could make electricity costlier and dirtier. As a principal technical executive at the Electric Power Research Institute, the premier U.S. utility research organization, he’s studied the risks himself.

But he also thinks conversations about the problem tend to miss a key point. Data centers could also make the grid cleaner and cheaper by embracing a simple concept: flexibility.

“Data centers are not just load — they can also be grid assets,” he said. Turning that proposition into reality is the goal of his most recent project, DCFlex, a collaborative effort to get data centers to “support the electric grid, enable better asset utilization, and support the clean energy transition.”

DCFlex is short for “data center flexibility,” a term that encompasses all the ways that these sprawling campuses and buildings full of servers, cooling equipment, power-control systems, backup generators, and batteries can reduce or shift their power use.

Since its October launch, DCFlex has grown from 15 to 37 funding participants. On the data-center side are tech “hyperscalers” like Google, Meta, and Microsoft; major data center developers like Compass and QTS; and AI computing and power equipment suppliers like Nvidia and Schneider Electric.

On the grid side are utilities such as Duke Energy, Pacific Gas & Electric, Portland General Electric, and Southern Company; power plant owners like Constellation Energy, NRG Energy, and Vistra; and five of the continent’s seven grid operators, which manage energy markets serving electricity to two-thirds of the U.S. population.

The range of participants reflects the broad interest in solving the pressing challenge of powering the data centers being proposed around the country without driving up grid costs and emissions.

That won’t be easy. From Virginia’s “Data Center Alley” to emerging hot spots in Arizona, Georgia, Indiana, Ohio, and beyond, utilities are being inundated with demands for round-the-clock power from data center projects that can add the equivalent of a small city’s electricity consumption within a few years. Meanwhile, it usually takes four or five years to connect new power plants to the grid.

Flexibility could make a big difference, however, said Tom Wilson, who’s worked on climate and energy issues for more than four decades, including advising projects at the Massachusetts Institute of Technology and Stanford University and serving at the White House Office of Science and Technology Policy during the Biden administration.

That’s because the impacts of massive new utility customers like data centers are tied not just to how much power they need but specifically to when they need it.

Utilities live and die by the few hours per year when demand for electricity peaks — usually during the hottest and coldest days. By refraining from using grid power during those peak hours, new data centers could significantly reduce their impact on utility costs and carbon emissions.

If data centers and other big electricity customers committed to curtailing their power use during peak hours, it could unlock tens of gigawatts of “spare” capacity on U.S. grids, according to a recent analysis from Duke University’s Nicholas Institute for Energy, Environment & Sustainability.

Realizing that spare capacity will be challenging, though. For starters, every new large power customer would have to agree not to use grid power during key hours of the year, which is far from a realistic expectation today. What’s more, utilities would need some serious proof that those big customers can actually follow through with promises to not use power during those peak times before letting them connect, because broken promises in this case could lead to overloaded grids or forced blackouts.

And flexibility can’t solve all the power problems that massive data center expansion could cause.

In a December report, consultancy Grid Strategies found that key data center markets are driving an unprecedented fivefold increase in the amount of new power demand that U.S. utilities and grid operators forecast over the next half decade or so. While that analysis “really focused on the peak demand forecast,” the sheer amount of power needed over the course of a year is “potentially just as big of a story,” said John Wilson, Grid Strategies’ vice president.

Still, for utilities struggling to plan and build the generation and grid infrastructure needed to support data centers, flexibility is worth exploring. That’s because data centers could make their operations flexible a lot faster than utilities can expand power grids and build power plants.

It typically takes seven to 10 years to build high-voltage transmission lines and four to five years to build a gas-fired power plant — “even in Texas,” Tom Wilson pointed out. The concept of relying on big customers to avoid using power instead of building all that infrastructure is just starting to take hold in utility planning, but it could play a major role in managing the surge in power demand.

Flexible data centers may also be able to secure space on capacity-constrained grids more quickly than inflexible competitors, Tom Wilson said. A U.S. Department of Energy report released last year included interviews with dozens of utilities, and one key takeaway was that “electricity providers often can accommodate the energy and capacity requests of a data center for (say) 350 days but need to find a win-win solution for the remaining 15 days.”

“If you have two projects in the queue, and one says they can be flexible and the other says they can’t be flexible, and they’re about the same size, then the one that can be flexible is more likely to be successful,” Tom Wilson said.
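The "350 days versus 15 days" framing can be illustrated with a toy calculation. The sketch below assumes a list of hourly loads already on a grid, a fixed grid capacity, and a data center that would otherwise draw constant power. It counts the hours in which the data center would have to cut back and the energy it would forgo. All inputs and names are hypothetical; this is not how any utility or the DCFlex project models flexibility.

```python
def curtailment_needed(existing_load_mw, grid_capacity_mw, data_center_mw):
    """Return (hours_curtailed, energy_curtailed_mwh) for hourly load data.

    In each hour, the data center may use whatever headroom is left after
    existing customers are served; any shortfall must be curtailed or
    covered by on-site resources.
    """
    hours = 0
    energy_mwh = 0.0
    for load in existing_load_mw:
        headroom = max(grid_capacity_mw - load, 0.0)
        shortfall = max(data_center_mw - headroom, 0.0)
        if shortfall > 0:
            hours += 1
            energy_mwh += shortfall  # one-hour intervals, so MW equals MWh
    return hours, energy_mwh


# Hypothetical four-hour window approaching a system peak.
hours, energy = curtailment_needed(
    existing_load_mw=[700, 800, 950, 1000],
    grid_capacity_mw=1000,
    data_center_mw=100,
)
print(f"curtail in {hours} hours, {energy:.0f} MWh in total")

# The 15 constrained days quoted in the DOE report, as a share of the year.
print(f"constrained share of the year: {15 / 365:.1%}")
```

The point of the exercise is that the constrained periods are a small slice of the year, roughly 4% of days in the DOE example, which is why a commitment to flex during those periods can matter so much to a utility's planning.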

That’s not lost on Brian Janous, cofounder of Cloverleaf Infrastructure, which develops what the company describes as “clean-powered, ready-to-build sites for the largest electric loads,” mainly data centers.

“You need to understand, when a utility says, ‘I can’t get you power,’ what they mean is, ‘There are certain hours of the day I can’t get you power,’” he said. The data center industry “lacks visibility into this, which is kind of shocking,” given that data center flexibility is nothing new.

In fact, back in 2016, when he worked as energy strategy director at Microsoft, Janous helped structure a deal for a data center in Cheyenne, Wyoming, to use fossil-gas-fired backup generators to reduce peak grid stress for utility Black Hills Energy. That promise, combined with Microsoft’s agreement to purchase nearly 240 megawatts of wind power, got that deal over the line.

Janous thinks many utilities are eager for similar propositions today. One unnamed utility executive told him recently that the backlog for connecting large data centers to its grid is now at least five years. “I asked, ‘What if the data center could be dispatchable?’ And he said, ‘Oh, we could connect them tomorrow. But nobody’s asking me that.’”

Getting utilities and data centers together to ask those kinds of questions is what DCFlex is all about. Project partners are now developing five to 10 demonstration projects, Tom Wilson said, none of which have been announced. But he described the scope of work as ranging from the development stage to “existing sites that are ready to roll.”

As for how these projects will help the grid, he laid out two broad methods: They’ll use on-site power generation or storage to replace what they’d otherwise pull from the grid, or they’ll use less electricity during peak hours.

Janous thinks on-site generation is the simpler approach. To some extent, it’s already happening today but typically with dirty diesel generators. Janous, Tom Wilson, and other experts say these diesel generators are not a viable option for hyperscalers, however. They’re simply too dirty and too expensive to rely on, except during grid outages or other dire situations.

Biodiesel and renewable diesel could work for some smaller data centers, Tom Wilson said. But it’s not yet clear whether air-quality rules would permit generators burning those fuels to run during nonemergencies. Nor are the economics viable for larger-scale data centers, he said.

Fossil-gas-fired backup generators like those Microsoft used in Cheyenne are another option — albeit one that still pollutes the local air and warms the planet. Still, a growing number of data center developers are looking to use them as a workaround to grid constraints. “We’re in the process of developing sites in many parts of the country,” Janous said. “Every one of them has access to natural gas.”

It would be a problem for the planet — and for meeting the climate goals major tech companies have committed to — if data centers planned to use fossil gas for a majority of their power. But if relied on sparingly and strategically, this choice might be less harmful than the alternatives: If a data center burns fossil gas just to power itself during grid peaks, that might reduce pressure on utilities to keep old coal-fired power plants open or to build much larger gas-fired plants that would lock in emissions for decades.

Other gas-fueled options for on-site power might be less damaging to the climate — although this remains a hotly debated topic. Microgrid developer Enchanted Rock plans to install gas generators at a Microsoft data center in San Jose, California, which will burn regular fossil gas but will offset that usage by purchasing an equivalent amount of “renewable natural gas” — methane captured from rotting food waste. It claims this will make the project emissions-free.

And utility American Electric Power has signed an agreement to buy 1 gigawatt of fuel cells from Bloom Energy, which it plans to install at data centers. The firm’s fuel cells still emit carbon dioxide, but they avoid the harmful nitrogen oxides caused by burning gas.

Batteries are another option — and the one that has the greatest potential to be clean. Most data centers have some batteries on-site to help computers ride through grid disruptions until backup generators can turn on, but relying on batteries for backup power and to provide grid support is a far more complex and costly endeavor.

“There are all kinds of trade-offs in terms of reliability, in terms of emissions, in terms of cost, in terms of the physical footprint,” Tom Wilson said. “In a storm situation that brought the grid down, you’d want something that can be dependable.”

A small but growing number of hyperscalers are looking to batteries for both backup power and grid flexibility. Google’s battery-backed data center in Belgium is one example.

“We built out battery storage as a way to displace part of our diesel gensets, to provide grid services, and to provide relief during times of extreme grid stress when we needed backup,” said Amanda Peterson Corio, Google’s global head of data center energy.

In the U.S., a Department of Energy grant is supporting a battery installation at an Iron Mountain data center in Virginia that’s meant to test the potential to store clean power for backup and grid-support uses.

It’s one of a number of DOE programs launched under the Biden administration whose purpose is to explore ways that “battery energy storage systems can provide similar levels of reliability, and without a lot of the challenges that diesel gensets or other backup power sources have,” Avi Shultz, director of the DOE’s Industrial Efficiency and Decarbonization Office, said in an October interview.

The other big idea for making data centers flexible focuses not on the power they can generate and store but on the power they use, Shultz said.

“Demand response” is the utility industry term for the practice of throttling power use during times of peak grid stress, or of shifting that power use to other times when the grid can handle it better, in exchange for payments from utilities or revenues in energy markets.
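The load-shifting half of that definition can be illustrated with a minimal Python sketch. It is not drawn from any utility program or data center operator: the function name, the 60/40 split between firm and deferrable load, and the four-hour evening peak are all hypothetical values chosen for illustration.

```python
def shift_deferrable_load(firm_mw, deferrable_mw, peak_hours):
    """Move deferrable work out of peak hours and spread it over off-peak hours.

    firm_mw: hourly load (MW) that must always run
    deferrable_mw: hourly load (MW) that can be postponed
    peak_hours: set of hour indices when the grid is stressed
    """
    hours = len(firm_mw)
    off_peak = [h for h in range(hours) if h not in peak_hours]
    if not off_peak:
        raise ValueError("no off-peak hours to shift load into")

    # Energy (MWh) of deferrable work that would have run during the peak.
    deferred_mwh = sum(deferrable_mw[h] for h in peak_hours)
    extra_per_hour = deferred_mwh / len(off_peak)

    profile = []
    for h in range(hours):
        if h in peak_hours:
            profile.append(firm_mw[h])  # only firm load runs at peak
        else:
            profile.append(firm_mw[h] + deferrable_mw[h] + extra_per_hour)
    return profile


# Hypothetical 100 MW site: 60 MW firm, 40 MW deferrable, peak from 5 to 9 p.m.
firm = [60.0] * 24
deferrable = [40.0] * 24
peak = {17, 18, 19, 20}
shifted = shift_deferrable_load(firm, deferrable, peak)
```

In this toy case the site does the same total work over the day but draws 40 MW less from the grid during the four peak hours, which is the kind of relief a utility would be paying for.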

Historically, data centers haven’t been interested in standard demand-response programs and markets. The value of what they do with that electricity is just too high compared with the potential rewards.

But if a data center’s participation in a demand-response program is the difference between it getting a grid connection or not, the programs become a lot more appealing.

Shultz highlighted two key data center tasks that are particularly ripe for load flexibility.

The first is “cooling loads and facility energy demand,” he said. Data centers use enormous amounts of electricity to keep their servers and computing equipment from overheating, and quite a bit of that energy is lost in the process of converting from high-voltage grid power to the low-voltage direct current that computing equipment uses.

Data center operators have invested heavily to make this cooling and power conversion more efficient in recent decades. Further advances in efficiency — and technologies that can store power for cooling for later use — could become “part of the routine best practices of data center operations,” Shultz said.

The second big target for load flexibility is “the core of the computational operation itself,” he said. Not all data centers need to run their computing equipment 24 hours a day, and “they may not be being operated in a way that’s optimized from an energy point of view. I think there’s an opportunity there to develop more innovative and flexible operational processes.”

Again, this isn’t a new idea. Cryptocurrency mining operations in Texas have been earning millions of dollars for not using electricity during grid emergencies, even as lawmakers, regulators, and neighbors of crypto mining operations have been raising alarms over that industry’s rising hunger for power. Google has also carried out some version of this through its “carbon-intelligent computing” program, which shifts certain data center operations to use cleaner power. In recent years, Google has touted how it can also shift computing load to relieve grid stress.

“Our carbon-aware computing platform started as how we can shift nonurgent compute loads to times when the grid is more clean,” Google’s Peterson Corio said. “We’ve also done that to support utility partners in times of extreme weather events.”

But last year’s DOE report on data center power use stated that aside from Google’s activities, contributors to its research “identified no examples of grid-aware flexible operation at data centers today” in the U.S. That absence of evidence “may result from the fact that electricity providers only recently started having to say no to data center interconnection requests.”

Plus, not every data center conducts tasks that can be easily postponed, Tom Wilson emphasized. “Data centers are all different. If you are Visa, in Virginia, their data center is transacting, I think, 80,000 credit approvals per second. You don’t want to say, ‘I’ll approve your credit when the sun is shining.’ That’s the kind of thing where you don’t have much flexibility. You need to deliver.”

Other tasks are better suited, he said, including, critically, much of the work of training the AI models that constitute the largest single source of increasing power demand from data centers. “There is potentially flexibility there — and a fair amount of it.”

Taking advantage of that flexibility is the idea behind Verrus, a company launched last year by Sidewalk Infrastructure Partners, a firm spun out of Alphabet. Traditional data centers separate their computing capacity into individual “halls,” each of which has its own power conversion, cooling, and backup generation. Verrus, by contrast, is planning data center complexes with “a centralized battery in the center of all the data halls, with a sophisticated microgrid controller that allows it to think about all the data halls as interruptible and schedulable,” Jonathan Winer, a Sidewalk Infrastructure Partners cofounder and the former co-CEO, said in an interview last year.

Not every “hall” will be dedicated to tasks that can be interrupted, he explained. But some of them will be — and “with AI training, I can press pause on it. These are multi-day, sometimes multi-week training runs, and you can press pause and resume.”
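As a rough illustration of what pressing pause and resume means for a long training run, here is a minimal Python sketch. It does not describe Verrus’ controller or any real training framework: the function name, the tick-by-tick stress signal, and the step counter standing in for a saved checkpoint are all assumptions made for the example.

```python
def train_with_pauses(total_steps, stress_by_tick):
    """Run a training job that idles whenever the grid signals stress.

    total_steps: number of training steps the job needs to finish
    stress_by_tick: iterable of booleans, True when the grid is stressed
    """
    checkpoint = 0  # last completed step, as a saved checkpoint would record
    log = []
    for tick, stressed in enumerate(stress_by_tick):
        if checkpoint >= total_steps:
            break
        if stressed:
            # Pause: no work is done, and no progress is lost.
            log.append((tick, "paused", checkpoint))
            continue
        checkpoint += 1  # stand-in for one real training step
        log.append((tick, "trained", checkpoint))
    return checkpoint, log


# Hypothetical signal: the grid is stressed during ticks 2 and 3.
stress = [False, False, True, True, False, False, False]
done, log = train_with_pauses(5, stress)
paused_ticks = sum(1 for _, status, _ in log if status == "paused")
```

The job still finishes all five steps. It simply takes two ticks longer, which is the trade a multi-day training run can usually absorb.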

Verrus hasn’t built one of these data centers yet, but it is targeting Arizona, California, and Massachusetts as its first markets. In a white paper last year, Sidewalk Infrastructure Partners explained why this kind of flexibility is a must for new data centers. As Winer said, “The data center industry has realized how much it needs to be a power-led model.”

It’s not clear how many data center developers are building flexibility into their grid interconnection requests to utilities. But that doesn’t mean it’s not happening.

Data center developers tend to be secretive about their hunt for sites and the nature of the discussions they have with utilities. Developers may be talking about flexibility with one utility while also shopping around for a more traditional interconnection that gives them access to the power they want at all hours of the day.

Flexibility deals are most likely to emerge in data center markets where unimpeded interconnections simply aren’t possible anymore due to grid constraints, said Aaron Bilyeu, Cloverleaf’s chief development officer. What’s changed is that “we’ve quickly run out of those opportunities,” he said.

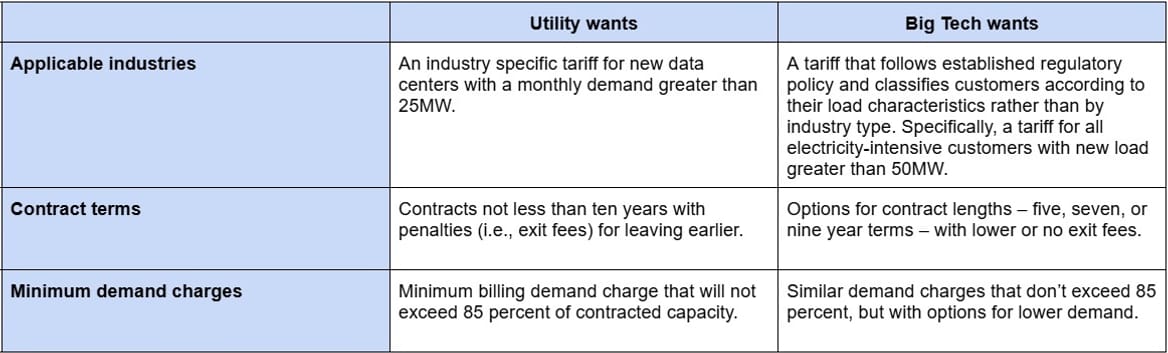

Utilities in grid-constrained parts of the country are beginning to take on the complexities of creating “flexible interconnection” policies and tariffs — the rules and rates for customers — that could provide data center developers some clarity on what a commitment to flexible operations could be worth to them. That’s a big part of the work underway at DCFlex, Tom Wilson said.

“We have three main workstreams. The first is aimed at defining the flexibilities that are possible and creating a taxonomy — each utility and RTO [regional transmission organization] has different words for the same things,” he said. “The second piece is aimed at incentives, rate structures, and regulatory issues on the utility side, looking at how they could effectively orchestrate flexibility.”

“The third piece is how to build the planning and operational tools that incorporate flexibility,” he added. That means figuring out how to calculate the impact of flexible versus non-flexible large customers on long-term planning for power plants, grids, and other infrastructure investments, which traditional planning processes don’t do today. “You need new tools, or to evolve tools, to think about and utilize the new opportunities that flexibility can provide.”

That work will be critical to giving regulators the tools they need to challenge utilities’ claims that they must build new fossil-gas power plants and keep coal plants open to serve peak loads, the Sierra Club wrote in a September report. Big customers “can have an outsized impact in avoiding new fossil fuel investment (or enabling coal retirements) by participating in demand management programs that allow utilities to subtract some or all of the customer’s load from its peak obligation,” the report notes.

That aligns with the needs of tech companies like Amazon, Google, Meta, and Microsoft that have aggressive clean energy goals. These companies are negotiating with utilities in hot data center markets like Georgia, arguing that letting data centers build clean energy, on-site power, and load flexibility into load forecasts could obviate some of the new dirty power that utility Georgia Power wants to build.

Putting the question of clean versus dirty power aside, data center developers can’t expect utilities and regulators to allow the costs of supplying them with round-the-clock power to fall on regular customers’ shoulders, Bilyeu said. “We believe data centers should pay their own way.”

This is the third article in our four-part series “Boon or bane: What will data centers do to the grid?”

The world’s wealthiest tech companies want to build giant data centers across the United States to feed their AI ambitions, and they want to do it fast. Each data center can use as much electricity as a small city and cost more than $1 billion to construct.

If built, these data centers would unleash a torrent of demand for electricity on the country’s power grids. Utilities, regulators, and policymakers are scrambling to keep pace. If they mismanage their response, it could lead to higher utility bills for customers and far more carbon emissions. But this mad dash for power could also push the U.S. toward a cleaner and cheaper grid — if tech giants and other data center developers decide to treat the looming power crunch as a clean-power opportunity.

Utilities from Virginia to Texas are planning to build large numbers of new fossil-gas-fired power plants and to extend the life of coal plants. To justify this, they point to staggering — but dubious — forecasts of how much electricity data centers will gobble up in the coming years, mostly to power the AI efforts of the world’s largest tech companies.

Most of the tech giants in question have set ambitious clean energy goals. They’ve also built and procured more clean power than any other corporations in the country, and they’re active investors in or partners of startups working on next-generation carbon-free energy sources like advanced geothermal.

But some climate activists and energy analysts believe that given the current frenzy to build AI data centers, these firms have been too passive — too willing to accept the carbon-intensive plans that utilities have laid out on their behalf.

It’s time, these critics say, for everyone involved — tech giants, utilities, regulators, and policymakers — to “demand better.” That’s how the Sierra Club put it in a recent report urging action from Amazon, Google, Meta, Microsoft, and other tech firms driving data center growth across the country.

“I’m concerned the gold rush — to the extent there’s a true gold rush around AI — is trumping climate commitments,” said Laurie Williams, director of the Sierra Club’s Beyond Coal campaign and one of the report’s authors.

Williams isn’t alone. Climate activists, energy analysts, and policymakers in states with fast-growing data center markets fear that data center developers are prioritizing expediency over solving cost and climate challenges.

“I think what we’re seeing is a culture clash,” she said. “You have the tech industry, which is used to moving fast and making deals, and a highly regulated utility space.”

Some tech firms intend to rely on unproven technologies like small modular nuclear reactors to build emissions-free data centers, an approach that analysts say is needlessly unreliable. Others want to divert electricity from existing nuclear plants — as Amazon hopes to do in Pennsylvania — which simply shifts clean power from utility grids to tech companies. Yet others are simply embracing new gas construction as the best path forward for now, albeit with promises to use cleaner energy down the road, as Meta is doing in Louisiana.

Meanwhile, several fossil fuel companies are hoping to convince tech firms and data center developers to largely avoid the power grid by building fossil-gas-fired plants that solely serve data centers — an idea that’s both antithetical to climate goals and, according to industry analysts, impractical.

But a number of tech firms and independent data center developers are pursuing more realistic strategies that are both affordable and clean in order to meet their climate goals.

These projects should be the model, clean power advocates say, if we want to ensure the predicted AI-fueled boom in energy demand doesn’t hurt utility customers or climate goals.

And ideally, the companies involved would go even further, Williams said, by engaging in utility proceedings to demand a clean energy transition, by bringing their own grid-friendly “demand management” and clean power and batteries to the table, and by looking beyond the country’s crowded data center hubs to places with space to build more solar and wind farms.

The basic mandate of utilities is to provide reliable and affordable energy to all customers. Many utilities also have mandates — issued by either their own executives or state policymakers — to build clean energy and cut carbon emissions.

But the scale and urgency of the data center boom has put these priorities on a collision course.

As the primary drivers of that conflict, data centers have a responsibility to help out. That’s Brian Janous’ philosophy. He’s the cofounder of Cloverleaf Infrastructure, a developer of sites for large power users, including data centers. Cloverleaf is planning a flagship data center project in Port Washington, a city about 25 miles north of Milwaukee.

Cloverleaf aims to build a data center campus that will draw up to 3.5 gigawatts of power from the grid when it reaches full capacity by the end of 2030, “which we think could be one of the biggest data center projects in the country,” Janous said. That’s equivalent to the power used by more than 2.5 million homes and a major increase in load for the region’s utility, We Energies, to try to serve.

Together with We Energies and its parent company, WEC Energy, Cloverleaf is working on a plan that, the companies hope, will avoid exposing utility customers to increased cost and climate risks.

“The utility has done a great job of building a very sustainable path,” Janous said. WEC Energy and Cloverleaf are in discussions to build enough solar, wind, and battery storage to meet more than half the site’s estimated energy needs. The campus may also be able to tap into zero-carbon electricity from the Point Beach nuclear power plant, which is now undergoing a federal relicensing process, he said.

The key mechanism of the deal is what Janous called a “ring-fenced, bespoke tariff.” That structure is meant to shield other utility customers from paying more than their fair share for infrastructure built to meet data centers’ demand.

“This tariff puts it completely in the hands of the buyer what energy mix they’re going to rely on,” he said. That allows Cloverleaf — and whatever customer or customers end up at the site it’s developing — to tap into the wind, solar, and battery storage capacity WEC Energy plans to build to meet its clean energy goals.

To be clear, this tariff structure is still being finalized and hasn’t yet been submitted to state utility regulators, said Dan Krueger, WEC Energy’s executive vice president of infrastructure and generation planning. But its fundamental structure is based on what he called a “simple, just not easy” premise: “If you come here and you say you’ll pay your own way,” covering the cost of the energy and the transmission grid you’ll use, “we invest in power plants” to provide firm and reliable power.

“We make sure we can get power to the site, we make sure we have enough capacity to give you firm power, and then we start lining up the resources that can help make you green,” he said.

WEC Energy’s broader plans to serve its customers’ growing demand for power haven’t won the backing of environmental advocates. The Sierra Club is protesting the utility’s proposal to build or repower 3 GW of gas-fired power plants in the next several years, and has pressed Microsoft, which is planning its own $3.3 billion data center in We Energies territory, to engage in the state-regulated planning process to demand cleaner options.

Krueger said that the gas buildout is part of a larger $28 billion five-year capital plan that includes about $9.1 billion to add 4.3 GW of wind, solar, and battery capacity through 2029. That plan encompasses meeting new demand from a host of large customers including Microsoft, but it doesn’t include the resources being developed for Cloverleaf.

Janous said he agreed with the Sierra Club’s proposition that “the biggest customers should be using their influence to affect policy.” At the same time, Cloverleaf is building its data center for an eventual customer, and “our customers are looking for speed, scale, and sustainability,” in that order. Cementing a tariff with a host utility is a more direct path to achieving this objective, he said.

Similar partnerships between utilities and data center developers are popping up nationwide.

In Georgia, the Clean Energy Buyers Association and utility Georgia Power are negotiating to give tech companies more freedom to contract for clean energy supplies. In North Carolina, Duke Energy is working with Amazon, Google, Microsoft, and steelmaker Nucor to create tariffs for long-duration energy storage, modular nuclear reactors, and other “clean firm” resources. In Nevada, utility NV Energy and Google have proposed a “clean transition tariff,” which would commit both companies to securing power from an advanced geothermal plant that Fervo Energy is planning.

“In the near term, to get things going quickly, we’re looking at solar and wind and storage solutions,” said Amanda Peterson Corio, Google’s global head of data center energy. “As we see new firming and clean technologies develop, we’ve planted a lot of seeds there,” like the clean transition tariff.

Insulating utility customers from bearing the costs of data center power demand is a core feature of these tariffs. Broader concerns over the costs that unchecked data center growth could impose have triggered pushback from communities, politicians, and regulators in data center hot spots. But Janous highlighted that Cloverleaf’s Wisconsin project will have “no impact on existing ratepayers — 100% of the cost associated with the site will flow through this tariff.”

That’s also good utility-ratemaking policy, said Chris Nagle, a principal in the energy practice at consultancy Charles River Associates, who worked on a recent report on the challenge of building what he described as “adaptable and scalable” tariffs that can apply to data centers across multiple utilities. “In the instances where one-off contracts or schedules are done, they should be replicable,” he said.

At the same time, Nagle continued, “each situation is different. Some operators may place more value on sourcing from carbon-free resources. Others may value cost-effectiveness more. Utilities may have sufficient excess generation capacity, or they may have none at all.”

Right now, top-tier tech companies appear willing to pay extra for clean power, said Alex Kania, managing director of equity research at Marathon Capital, an investment banking firm focused on clean infrastructure. He pointed to reports that Microsoft is promising to pay Constellation Energy roughly twice the going market price of long-term electricity supply for the zero-carbon power it expects to secure from restarting a unit of the former Three Mile Island nuclear plant.

Given that willingness to pay, “I think these hyperscalers could go further,” Kania said, using the common term for the tech giants like Amazon, Google, Meta, and Microsoft that have the largest data centers. With their scale, these companies can “go to regulators and say, ‘We’re going to find a way for utilities to grow and make these investments but also hold rates down for customers.’”

But cost is not the primary barrier to building clean power today.

In fact, portfolios of new solar, wind, and batteries are cheaper than new gas-fired power plants in most of the country. Instead, the core barrier to getting clean power online — be it for data centers or other large-scale power buyers, or even just for utilities — is the limited capacity of the power grid itself.

Across much of the U.S., hundreds of gigawatts of solar, wind, and battery projects are held up in yearslong waitlists to get interconnected to congested transmission grids. Facing this situation, some data center developers are targeting parts of the country where they can build their own clean power and avoid as much of the grid logjam as possible.

In November, for example, Google, infrastructure investor TPG Rise Climate, and clean power developer Intersect Power unveiled a plan to invest $20 billion by 2030 into clusters of wind, solar, and batteries that are largely dedicated to powering newly built data centers.

With the right balance of wind, solar, and batteries, topped off by power from the connecting grid or from on-site fossil-gas generators, Intersect Power CEO Sheldon Kimber says this approach can be “cleaner than any part of the grid. You’re talking 80% clean energy.”

And importantly, that clean energy is “all new and additional,” Google’s Peterson Corio said. That matters to her employer, which wants to “make sure any new load we’re building, we’re building new generation to match it.”

Think tank Energy Innovation has cited this “energy park” concept as a neat solution to the twin problems of grid congestion and ballooning power demand. Combining generation and a big customer behind a single interconnection point can “speed up development, share costly onsite infrastructure, and directly connect complementary resources,” policy adviser Eric Gimon wrote in a December report.

And while many existing data center hubs aren’t well suited to energy parks, plenty of other places around the country are, said Gary Dorris, CEO and cofounder of energy analysis firm Ascend Analytics. Swaths of the Great Plains states, “roughly from Texas to the Dakotas,” offer “the combination of wind and solar, and then storage, to get to close to 100%” of a major power customer’s electricity needs, he said.

That’s not to say that building these energy parks will be simple. First, there’s the sheer amount of land required. A gigawatt-scale data center may occupy a “couple hundred acres,” Kimber said, but powering it will take about 5,000 acres of solar and another 10,000 for wind turbines.

And then there are the regulatory hurdles involved. Almost all U.S. utilities hold exclusive rights to provide power and build power-delivery infrastructure within the territories they serve. The exception is Texas, which has a uniquely competitive energy regulatory regime. Intersect Power plans to build its first energy park with Google and TPG Rise Climate in Texas, and the partners haven’t disclosed if they’re working on projects in any other states.

Cloverleaf’s Janous highlighted this and other constraints to the energy-park concept.

Getting the workforce to build large-scale projects in remote areas is another challenge, he added, as is accessing the fiber-optic data pipelines needed by data centers serving time- and bandwidth-sensitive tasks.

“We think the market for those sorts of deals is relatively small,” he said.

On the other hand, the task of training AI systems, which many hyperscalers are planning to dedicate billions of dollars to over the next few years, doesn’t require the same bandwidth or latency and can be “batched” to run at times when power is available.

“Historically a lot of data centers have landed close to each other to make communications faster, but it isn’t clear that the data centers being built today have those same constraints,” said Jeremy Fisher, principal adviser for climate and energy at the Sierra Club’s Environmental Law Program and co-author of Sierra Club’s recent report. “To the extent that the AI demand is real, those data centers should be closer to clean energy and contracting with new, local renewable energy and storage to ensure their load isn’t met with coal and gas.”

The Sierra Club and other climate advocates would prefer that data center demand be met with no new fossil-fuel power at all. But few, if any, industry analysts think that is realistic. So, the question becomes how much gas will be necessary.

A growing number of companies are targeting data centers as potential new customers for gas-fired power, including oil and gas majors. ExxonMobil announced plans to enter the power-generation business in December, proposing to build a massive gas-fired power plant dedicated to powering data centers. A partnership between Chevron, investment firm Engine No. 1, and GE Vernova launched last month with a promise to build the country’s “first multi-gigawatt-scale co-located power plant and data center.”

President Donald Trump has also backed this idea of building fossil-fuel power plants for data centers. “I’m going to give emergency declarations so that they can start building them almost immediately,” he told attendees of the World Economic Forum in Davos, Switzerland, last month. “You don’t have to hook into the grid, which is old and, you know, could be taken out. … They can fuel it with anything they want. And they may have coal as a backup — good clean coal.”

The federal government doesn’t regulate utilities and power plants, however — states do. And even if that weren’t the case, Janous and Intersect Power’s Kimber agreed that building utility-scale gas power plants solely for data centers is a nonstarter. “We’ve been pitched so many projects on building behind-the-meter combined-cycle gas plants,” Janous said. “We think that’s absolutely the wrong approach.”

Kimber said that Intersect Power’s energy-parks concept does include gas-fueled generators. But they’ll be relatively cheap, allowing them to earn their keep even if run infrequently to fill the gaps at times when solar, wind, and batteries can’t supply power. Eventually they can be replaced with next-generation storage technologies.

That’s quite different from building a utility-scale power plant that must run most of the time for decades to pay back its cost, he said. “Our solution is more dynamic: It exhibits less lock-in, and it’s faster and more practical.”

Nor can large-scale gas power plants be built quickly enough to match the pace that developers have set for themselves to get data centers up and running. Multiple energy analysts have repeated that point over the past year, as have the companies that make and deploy the power plant technologies, like GE Vernova, which is reporting a three-year, $3 billion backlog for its gas turbines.

Utility holding company and major clean energy developer NextEra Energy announced last month that it was moving into building gas power plants with GE Vernova. But CEO John Ketchum noted in an earnings call that gas-fired generation “won’t be available at scale until 2030 and then, only in certain pockets of the U.S.,” and that costs of those power plants have “more than doubled over the last five years due to the limited supply of gas turbines.” Renewables and batteries, by contrast, “are ready now to meet that demand and will help lower power prices.”

Marathon Capital’s Kania agreed with this assessment. “Time-to-power is the true bottleneck,” he said. “But if you can figure out how to pull a rabbit out of a hat and get power resources up in the next few years, that’s going to be very valuable — because that’s very scarce.”

In the fourth and final part of this series, Canary Media reports on how flexibility can help the grid better handle data centers.

A handful of school buses in northern Illinois will soon have a new summer job.

ComEd is the latest utility to explore whether electric school buses could help manage the grid when school is out of session and air conditioners are humming.

Under such vehicle-to-grid, or V2G, arrangements, electric school buses charge up at night when power is cheap and plentiful, then discharge electricity to the grid when local power demand is high. This infusion can alleviate the need to fire up natural gas peaker plants, buy expensive power on the market, or even build new power plants.

The buses basically act as batteries attached to the grid, in a win-win situation where school districts are paid for the service and utilities get power that is cheaper and possibly cleaner than what they could otherwise acquire during peak hours.
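The charge-at-night, discharge-at-peak pattern can be sketched in a few lines of Python. This is only an illustration, not ComEd’s or Nuvve’s actual dispatch logic: the function name, the 150 kWh battery, the 60 kW charger, the 30 kWh reserve, and the hour windows are all hypothetical. It also assumes a summer day when the bus stays parked.

```python
def v2g_day_plan(capacity_kwh, power_kw, reserve_kwh, charge_hours, peak_hours):
    """Plan one day of hourly charging (+kWh) and discharging (-kWh) for a bus.

    With one-hour steps, a charger rated at power_kw moves at most
    power_kw kWh in or out of the battery per step.
    """
    stored = reserve_kwh  # assume the bus starts the day at its reserve level
    plan = {}
    for hour in range(24):
        if hour in charge_hours:
            # Charge overnight until the battery is full.
            delta = min(power_kw, capacity_kwh - stored)
        elif hour in peak_hours:
            # Discharge to the grid, but never below the reserve.
            delta = -min(power_kw, stored - reserve_kwh)
        else:
            delta = 0.0
        stored += delta
        plan[hour] = delta
    return plan, stored


plan, end_of_day = v2g_day_plan(
    capacity_kwh=150.0,
    power_kw=60.0,
    reserve_kwh=30.0,
    charge_hours={0, 1, 2, 3, 4, 5},
    peak_hours={15, 16, 17, 18, 19},
)
exported_kwh = -sum(d for d in plan.values() if d < 0)
```

Under these made-up numbers, a single bus fills up in the first two overnight hours and exports 120 kWh across the first two peak hours before reaching its reserve.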

V2G projects are still in nascent and pilot-project stages, and significant challenges exist. The grid needs to communicate seamlessly with the bus charging station, and operators must make sure bus batteries are ready when needed and that the grid isn’t overloaded by local bursts of energy. Standards and certifications for V2G technology and practice are also still in early stages.

“It takes a lot of effort to do those communications properly,” said Greggory Kresge, senior manager for utility engagement and transportation electrification at the World Resources Institute, a research and advocacy nonprofit focused on environmental and economic issues. “You have communication about speed, power level, how many kilowatts, how fast, what’s the duration — all these different packets of information going back and forth. It’s a fragile ecosystem. If one of those communication links breaks, it doesn’t function.”

ComEd has proposed a pilot project launching this spring and running through 2025 in partnership with the San Diego-based company Nuvve, which also has led V2G pilots in California, Delaware, and New York as well as in Europe and Asia. While the ComEd pilot will only involve four electric school buses in three different northern Illinois school districts, it could pave the way for widespread V2G in an area with hot summers, air pollution problems, and lots of students.

“The main idea is to look at this from a technology-demonstration standpoint,” said Sri Raghavan (Raghav) Kothandaraman, ComEd manager of emerging technology, smart grid, and innovation. “How does the charger work in connection with the bus? How does it work with the grid? How do we send commands to these chargers to be able to discharge during particular times? We’re trying to look at it from a win-win-win scenario for school districts and [electric bus] manufacturers as well as the utility.”

Kothandaraman said he could not say whether the buses are already owned by the districts or would be provided by ComEd. Nuvve CEO and cofounder Gregory Poilasne said the vehicles would be made by Blue Bird, one of the country’s leading electric-bus manufacturers.

Electric school buses cost about three times as much upfront as traditional diesel school buses, though the savings on fuel and maintenance can make the total cost of ownership lower over time.

School districts have had access to electric school bus funding from the Volkswagen emissions-cheating settlement and the Biden administration’s $5 billion Clean School Bus Program, but the federal initiative ends next year. Clean energy advocates say V2G programs could provide a new revenue source that makes electric school buses more financially viable for districts while slashing the air pollution and noise that students and drivers are exposed to with diesel buses.

A 2022 WRI report counted at least 15 utilities across 14 states with electric school bus V2G programs. Kresge noted that school districts can earn high payments for power from their buses during peak demand or “emergency load reduction program” times designated by utilities. In California pilot programs, utilities pay $2 per kilowatt-hour during emergency load reduction periods, whereas market prices in the state hover around 30 cents per kilowatt-hour.
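To put those two rates side by side, here is a rough Python calculation. The $2 and 30-cent figures come from the paragraph above; the 100 kilowatt-hours discharged per event is a hypothetical figure chosen only for illustration, not a number from WRI or any utility.

```python
# Rates quoted in the article.
EMERGENCY_RATE_USD_PER_KWH = 2.00  # California pilot emergency load reduction rate
MARKET_RATE_USD_PER_KWH = 0.30     # approximate market price in the state

# Assumption for illustration: energy one bus sends to the grid in one event.
ASSUMED_DISCHARGE_KWH = 100.0


def discharge_revenue(energy_kwh, rate_usd_per_kwh):
    """Payment for discharging energy_kwh at a flat per-kWh rate."""
    if energy_kwh < 0:
        raise ValueError("energy_kwh must be non-negative")
    return energy_kwh * rate_usd_per_kwh


emergency = discharge_revenue(ASSUMED_DISCHARGE_KWH, EMERGENCY_RATE_USD_PER_KWH)
market = discharge_revenue(ASSUMED_DISCHARGE_KWH, MARKET_RATE_USD_PER_KWH)
print(f"Emergency event: ${emergency:.2f}")
print(f"Market price:    ${market:.2f}")
print(f"Ratio:           {emergency / market:.1f}x")
```

Whatever amount of energy is assumed, the emergency rate pays between six and seven times the market price.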

Along with feeding the grid, electric school buses can act as behind-the-meter batteries that give schools emergency power during blackouts, Kresge said. In California, they can also power school buildings when utilities shut down transmission lines because of wildfire risk.

“We’re really looking at these buses as resiliency assets, for potential emergency backup power,” said Kresge. “You’re not powering an entire school but just the gymnasium or cafeteria,” which could serve as a community shelter during a disaster, he said. “If a tornado comes through, kids are not going to school, and the buses are available unless they got picked up and moved by the tornado.”

ComEd’s pilot is part of its Beneficial Electrification program, wherein state regulators required the utility to invest in vehicle electrification, including spending $5 million a year for three years on pilots to explore the most efficient and equitable ways to do so.

Kothandaraman said the participating school districts will likely be announced in the coming weeks after contracts are finalized. ComEd’s service territory includes Chicago as well as surrounding suburbs and several other cities. Last year, Chicago announced federal funding for up to 50 electric school buses for the district.

Poilasne noted that grid battery storage and innovations like V2G are increasingly necessary in part because of the extra demand that more and more electric vehicles will put on grids.

“We’re in an environment where for the first time in 25 years, the load on the grid is increasing, driven by heat pumps, data centers, [and] EVs,” Poilasne said. “It’s not just load increasing; it’s the volatility of these loads. The generation is volatile; the load is volatile. You need to design a system for peaks” that last a short time but can skyrocket electricity costs.

“The utilities love to upgrade infrastructure. That’s how they’re making money, but in this environment, they can’t just upgrade the system because the cost would be prohibitive,” Poilasne added. “It’s all about keeping the cost of energy equitable in this fast-changing environment.”

A school district participating in V2G has to install bidirectional charging stations, which are significantly more expensive than traditional ones. However, utilities may be willing to subsidize this infrastructure. Utilities additionally need to be able to handle two-way flow of power on their grids. This also happens when rooftop or other distributed solar panels send power to the grid, so utilities in solar-heavy states are especially prepared for this dynamic.

A third-party company like Nuvve typically provides the software and manages the charging stations for a school district, whether it is doing V2G or not.

“Vehicle readiness is the number one priority,” said Poilasne. “There’s a lot of work on forecasting when the EV will be there, when it will come back, what’s the level of charge when it comes back.”

WRI hosts a utility working group on V2G programs and advises that utilities structure rates specifically to make such initiatives more attractive for school districts. WRI also recommends school bus V2G programs prioritize communities with disadvantaged populations facing disproportionate air pollution, since electric buses can directly improve the air students breathe each day.

Even though school bus V2G programs are still small, Kresge thinks they will become commonplace and financially beneficial in coming years.

“We’ve been recommending if you have the opportunity to buy bidirectional-capable bus chargers, even if you’re not moving forward with V2G right at this moment, you should go ahead and get it,” Kresge said. “We’re anticipating that we’re going to see huge advancements and a lot more opportunity within the next four to five years on the technology side that will make [V2G] more scalable, more deployable. These programs are coming, so don’t hold back.”

This is the second article in our series “Boon or bane: What will data centers do to the grid?”

There’s no question that data centers are about to cause U.S. electricity demand to spike. What remains unclear is by how much.

Right now, there are few credible answers. Just a lot of uncertainty — and “a lot of hype,” according to Jonathan Koomey, an expert on the relationship between computing and energy use. (Koomey has even had a general rule about the subject named after him.) This lack of clarity around data center power requires that utilities, regulators, and policymakers take care when making choices.

Utilities in major data center markets are under pressure to spend billions of dollars on infrastructure to serve surging electricity demand. The problem, Koomey said, is that many of these utilities don’t really know which data centers will actually get built and where — or how much electricity they’ll end up needing. Rushing into these decisions without this information could be a recipe for disaster, both for utility customers and the climate.

Those worries are outlined in a recent report co-authored by Koomey along with Tanya Das, director of AI and energy technology policy at the Bipartisan Policy Center, and Zachary Schmidt, a senior researcher at Koomey Analytics. The goal, they write, “is not to dismiss concerns” about rising electricity demand. Rather, they urge utilities, regulators, policymakers, and investors to “investigate claims of rapid new electricity demand growth” using “the latest and most accurate data and models.”

Several uncertainties make it hard for utilities to plan new power plants or grid infrastructure to serve these data centers, most of which are meant to power the AI ambitions of major tech firms.

AI could, for example, become vastly more energy-efficient in the coming years. As evidence, the report points to the announcement from Chinese firm DeepSeek that it replicated the performance of leading U.S.-based AI systems at a fraction of the cost and energy consumption. The news sparked a steep sell-off in tech and energy stocks that had been buoyed throughout 2024 on expectations of AI growth.

It’s also hard to figure out whose data is trustworthy.

Companies like Amazon, Google, Meta, Microsoft, OpenAI, Oracle, and xAI each have estimates of how much their demand will balloon as they vie for AI leadership. Analysts also have forecasts, but those vary widely based on their assumptions about factors ranging from future computing efficiency to manufacturing capacity for AI chips and servers. Meanwhile, utility data is muddled by the fact that data center developers often surreptitiously apply for interconnection in several areas at once to find the best deal.

These uncertainties make it nearly impossible for utilities to gauge the reality of the situation, and yet many are rushing to expand their fleets of fossil-fuel power plants anyway. Nationwide, utilities are planning to build or extend the life of nearly 20 gigawatts’ worth of gas plants and to delay the retirement of aging coal plants.

If utilities build new power plants to serve proposed data centers that never materialize, other utility customers, from small businesses to households, will be left paying for that infrastructure. And utilities will have spent billions in ratepayer funds to construct those unnecessary power plants, which will emit planet-warming greenhouse gases for years to come, undermining climate goals.

“People make consequential mistakes when they don’t understand what’s going on,” Koomey said.

Some utilities and states are moving to improve the predictability of data center demand where they can. The more reliable the demand data, the more likely that utilities will build only the infrastructure that’s needed.

In recent years, the country’s data center hot spots have become a “wild west,” said Allison Clements, who served on the Federal Energy Regulatory Commission from 2020 to 2024. “There’s no kind of source of truth in any one of these clusters on how much power is ultimately going to be needed,” she said during a November webinar on U.S. transmission grid challenges, hosted by trade group Americans for a Clean Energy Grid. “The utilities are kind of blown away by the numbers.”

A December report from consultancy Grid Strategies tracked enormous load-forecast growth in data center hot spots, from northern Virginia’s “Data Center Alley,” the world’s densest data center hub, to newer boom markets in Georgia and Texas.

Koomey highlighted one big challenge facing utilities and regulators trying to interpret these forecasts: the significant number of duplicate proposals they contain.

“The data center people are shopping these projects around, and maybe they approach five or more utilities. They’re only going to build one data center,” he explained. “But if all five utilities think that interest is going to lead to a data center, they’re going to build way more capacity than is needed.”
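Koomey's five-utility example can be worked through in a short Python sketch. The project names, utility names, and megawatt figures below are invented for illustration, and the sketch assumes something that, as the article goes on to explain, does not exist in public form today: a shared identifier that shows several inquiries belong to the same project.

```python
# Hypothetical inquiries: (project_id, utility, requested_mw).
inquiries = [
    ("dc-alpha", "Utility A", 300),
    ("dc-alpha", "Utility B", 300),
    ("dc-alpha", "Utility C", 300),
    ("dc-alpha", "Utility D", 300),
    ("dc-alpha", "Utility E", 300),
]


def naive_total_mw(inquiries):
    """Sum every inquiry as if each one becomes its own data center."""
    return sum(mw for _, _, mw in inquiries)


def deduplicated_total_mw(inquiries):
    """Count each project once, using its largest single request."""
    largest_request = {}
    for project_id, _, mw in inquiries:
        largest_request[project_id] = max(largest_request.get(project_id, 0), mw)
    return sum(largest_request.values())


print("Counted separately:", naive_total_mw(inquiries), "MW")
print("Counted once:      ", deduplicated_total_mw(inquiries), "MW")
```

In this invented case, the five utilities together would be planning for five times the load that one data center will actually bring.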

It’s hard to sort out where this “shopping” is happening. Tech companies and data center developers are secretive about these scouting expeditions, and utilities don’t share them with one another or the public at large. National or regional tracking could help, but it doesn’t exist in a publicly available form, Koomey said.

To make things more complicated, local forecasts are also flooded with speculative interconnection requests from developers with land and access to grid power, with or without a solid partnership or agreement in place.

“There isn’t enough power to provide to all of those facilities. But it’s a bit of a gold rush right now,” said Mario Sawaya, a vice president and the global head of data centers and technology at AECOM, a global engineering and construction firm that works with data center developers.

That puts utilities in a tough position. They can overbuild expensive energy infrastructure and risk whiffing on climate goals while burdening customers with unnecessary costs, or underbuild and miss out on a once-in-a-lifetime economic opportunity for themselves and their communities.

In the face of these risks, some utilities are trying to get better at separating viable projects from speculative ones, a necessity for dealing with the onslaught of new demand.

Utilities and regulators are used to planning for housing developments, factories, and other new electricity customers that take several years to move from concept to reality. A data center using the equivalent of a small city’s power supply can be built in about a year. Meanwhile, major transmission grid projects can take a decade or more to complete, and large power plants take three to five years to move through permitting, approval, procurement, and construction.

Given the mismatch in timescales, “the solution is talking to each other early enough before it becomes a crisis,” said Michelle Blaise, AECOM’s senior vice president of global grid modernization. “Right now we’re managing crises.”

Koomey, Das, and Schmidt highlight work underway on this front in their February report: “Utilities are collecting better data, tightening criteria about how to ‘count’ projects in the pipeline, and assigning probabilities to projects at different stages of development. These changes are welcome and should help reduce uncertainty in forecasts going forward.”
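A minimal Python sketch of what "assigning probabilities to projects at different stages" could look like follows. The stage names, probabilities, and project sizes are hypothetical; the report quoted above does not specify them, and a real utility would derive its own from past completion rates.

```python
# Hypothetical likelihood that a project at each stage is eventually built.
STAGE_PROBABILITY = {
    "inquiry": 0.10,
    "study_requested": 0.30,
    "agreement_signed": 0.70,
    "under_construction": 0.95,
}


def expected_load_mw(pipeline):
    """Weight each project's requested load by its stage probability."""
    total = 0.0
    for stage, requested_mw in pipeline:
        total += STAGE_PROBABILITY[stage] * requested_mw
    return total


# Hypothetical pipeline: (stage, requested_mw).
pipeline = [
    ("inquiry", 500),
    ("inquiry", 400),
    ("study_requested", 300),
    ("agreement_signed", 200),
    ("under_construction", 100),
]

requested = sum(mw for _, mw in pipeline)
print(f"Requested load: {requested} MW")
print(f"Probability-weighted load: {expected_load_mw(pipeline):.0f} MW")
```

Under these assumed weights, the forecast is far smaller than the sum of all requests, because early-stage inquiries count for only a fraction of the load they ask for.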